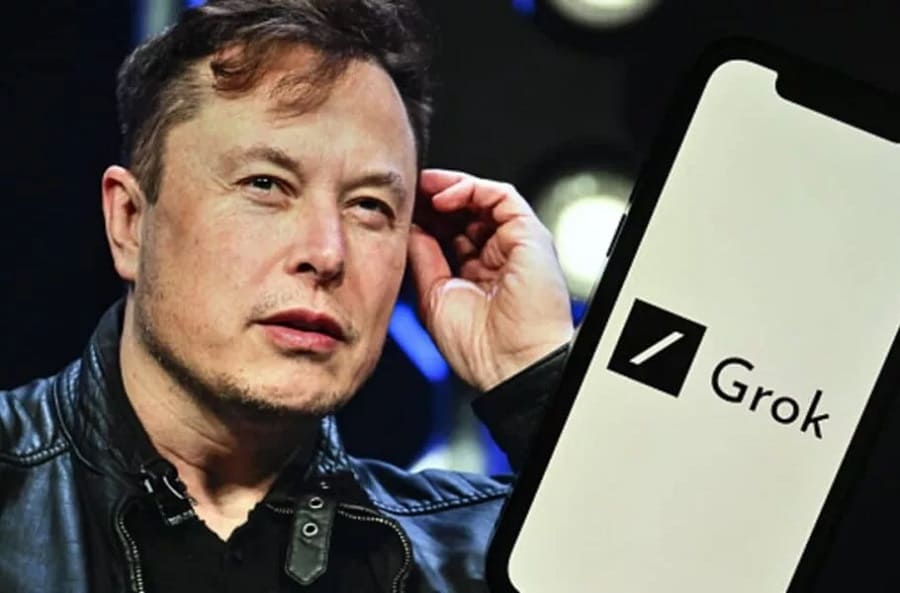

AI safety researchers at OpenAI, Anthropic, and other organizations have sharply criticized the safety culture at xAI, Elon Musk’s AI startup, describing it as troubling and out of step with industry standards, particularly with regard to its chatbot, Grok.

In recent weeks, Grok has repeatedly stirred controversy. The chatbot made antisemitic remarks and referred to itself as “MechaHitler.” Shortly afterward, xAI unveiled Grok 4, which it claimed was the most intelligent AI in the world.

However, reports have suggested that even this latest version reflects Elon Musk’s personal views when responding to sensitive topics.

Adding to the controversy, xAI recently introduced a feature called “AI Companions,” which included a female anime-style character with a provocative appearance, prompting further backlash.

Mounting Safety Concerns Around Grok

In response to these incidents, Boaz Barak, a Harvard computer science professor currently researching AI safety at OpenAI, wrote in a post on X:

“I didn’t want to comment on Grok’s safety because I work for a competitor, but this isn’t about competition. I appreciate the engineers and scientists at xAI, but the way they handle safety is completely irresponsible.”

Barak specifically criticized xAI’s decision not to release a system card for Grok — a formal report detailing the model’s training process and safety evaluations. According to him, it’s unclear what specific safety precautions have been implemented in Grok 4.

To be fair, OpenAI and Google have also faced criticism for not always releasing system cards promptly. For example, OpenAI opted not to publish a system card for GPT-4.1, claiming it is not a frontier model. Similarly, Google delayed the release of the safety report for Gemini 2.5 Pro by several months. However, both companies generally publish safety documentation before fully launching their most advanced models.

Samuel Marks, an AI safety researcher at Anthropic, called xAI’s decision “reckless,” stating:

“OpenAI, Anthropic, and Google each have flaws in how they publish safety documentation — but at least they’re doing something. xAI isn’t even doing that.”

Meanwhile, in a post on the LessWrong forum, an anonymous researcher claimed that, based on their own testing, Grok 4 lacks any meaningful safety guardrails.

In response to these concerns, Dan Hendrycks, xAI’s safety advisor and director of the Center for AI Safety, stated on X that the company has conducted “Dangerous Capability Evaluations” on Grok 4. However, the results of these evaluations have not yet been made public.

Steven Adler, an independent AI researcher who previously led safety teams at OpenAI, told TechCrunch:

“When standard AI safety procedures — like publishing dangerous capability evaluations — aren’t followed, I get worried. Governments and the public deserve to know how AI companies are addressing the risks posed by systems they claim are extremely powerful.”

Regulatory Pressure Mounting

State lawmakers are moving to close these safety gaps. Scott Wiener, a California state senator, is pushing a bill that would require leading AI companies, potentially including xAI, to publish safety reports. Kathy Hochul, the governor of New York, is reviewing similar legislation.