Artificial intelligence (AI) has become a major part of our lives, from the way we search for information to how we get recommendations for videos and products or translate text. Behind the scenes, one critical process makes all of this possible: inference. While the term may sound technical, it simply refers to a trained AI model applying what it has learned to new input to produce a useful result.

This article breaks down what AI inference is, how it works, and why it’s becoming increasingly important in modern technology.

What Is AI Inference?

In everyday language, inference means drawing a conclusion from known information. In AI, it means something very similar: a trained model applies the patterns it learned during training to new input in order to make predictions, classify things, or perform tasks.

Think of it as a very advanced form of pattern recognition. For example, when you hear “peanut butter and…”, your brain likely fills in “jelly” based on common associations. AI inference works in a comparable way, applying patterns learned from training data to predict what should come next or to recognize what something is.
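To make the “peanut butter and…” idea concrete, here is a minimal Python sketch of next-word prediction. It is a toy bigram model built on a tiny hypothetical corpus: “training” counts which word follows which, and “inference” looks up the most frequent follower of the last word in the prompt. Real language models use neural networks and the whole preceding context rather than a single word, so treat this only as an illustration of the learn-then-predict idea.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data", invented for illustration only.
CORPUS = [
    "peanut butter and jelly",
    "peanut butter and jelly sandwich",
    "peanut butter and banana",
    "salt and pepper",
]

def train(sentences):
    """Training: count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def infer_next_word(model, prompt):
    """Inference: use the learned counts to guess the word after the prompt."""
    last_word = prompt.split()[-1]
    followers = model.get(last_word)  # .get() does not create new entries
    if not followers:
        return None  # the model never saw this word during training
    return followers.most_common(1)[0][0]

model = train(CORPUS)
print(infer_next_word(model, "peanut butter and"))  # jelly
```

Because this toy model only looks at the last word, it predicts “jelly” after “and” simply because that pairing appeared most often in the made-up corpus.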

How Does Inference Fit Into the AI Process?

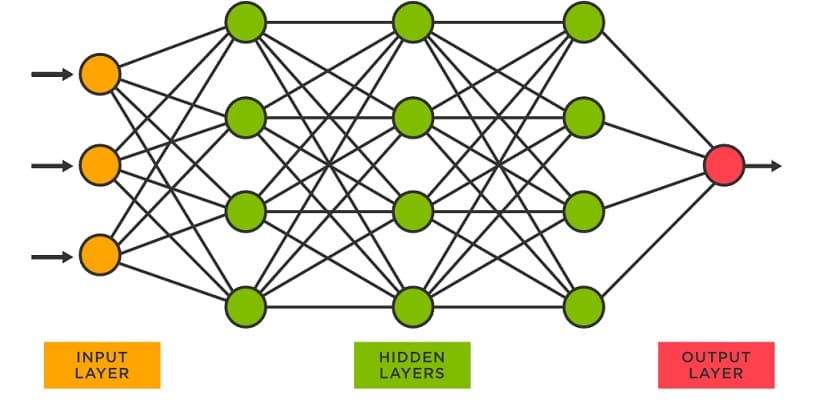

AI models don’t start out knowing anything. They first go through a training phase in which they process huge amounts of data. During training, the model adjusts its internal parameters to capture relationships between pieces of data, such as words in a sentence, colors in an image, or sounds in speech.

Once trained, the model enters the inference phase. Its learned parameters typically stay fixed, and the model is applied to new inputs in real-world applications. For example:

- A language model predicting the next word in a sentence

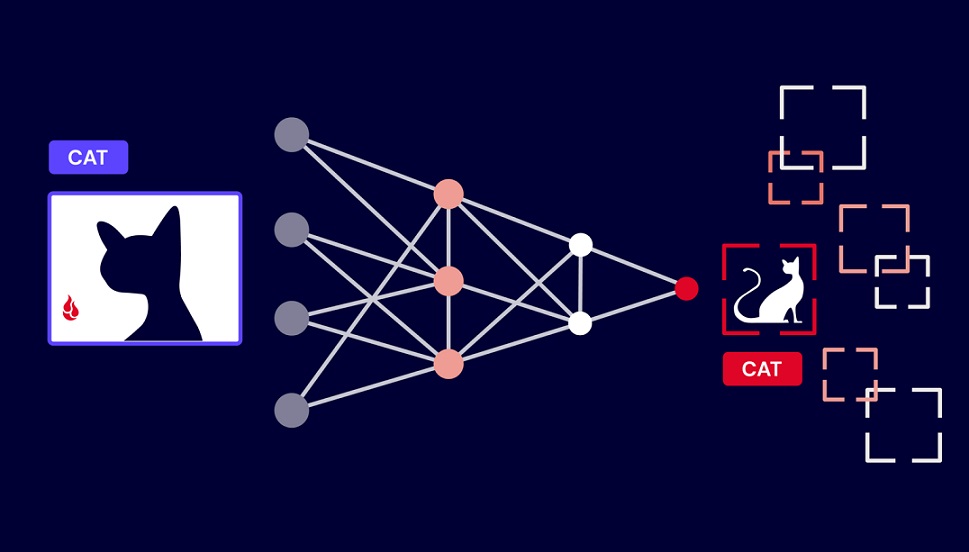

- A vision model identifying a cat in a photo

- A recommendation engine suggesting videos or ads based on your past behavior

Inference is the engine that powers these applications. It’s how AI goes from being a theoretical tool to something practical and interactive.
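The split between training and inference can be sketched in a few lines of Python. This example uses a nearest-centroid classifier, a deliberately simple stand-in for a real vision model, and the two “features” per photo (ear pointiness and snout length) are hypothetical numbers invented for illustration. Training runs once to compute one average point per label; inference then reuses those fixed averages to label new inputs.

```python
import math

# Hypothetical toy features per photo: (ear pointiness, snout length), each 0-1.
# Real vision models learn their own features from raw pixels.
TRAINING_DATA = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
]

def train(examples):
    """Training phase: average the feature vectors of each label (one centroid per label)."""
    sums, counts = {}, {}
    for features, label in examples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in total) for label, total in sums.items()}

def infer(model, features):
    """Inference phase: the centroids stay fixed; return the label of the closest one."""
    return min(model, key=lambda label: math.dist(features, model[label]))

model = train(TRAINING_DATA)       # done once, ahead of time
print(infer(model, (0.85, 0.25)))  # cat
print(infer(model, (0.25, 0.85)))  # dog
```

The key design point is that `infer` never modifies `model`: training happens once, ahead of time, and inference is the repeated step that runs every time a new input arrives.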

Real-World Examples of AI Inference

Inference is used in almost every kind of AI you interact with:

- Search engines: When you ask a question in Google Search, AI models infer what you’re really asking and summarize the best possible answers.

- Translation tools: Modern translation tools are powered by AI models that handle context and grammar better than older statistical methods.

- Image generation: AI can create lifelike images thanks to models whose inference captures textures, shapes, and even a rough sense of how the physical world behaves.

- Recommendation systems: Platforms like YouTube, Netflix, and online stores use inference to suggest content tailored specifically to you.

Even simpler tasks, like identifying whether a picture contains a cat, rely on inference: the model checks the new picture against the visual patterns of cats it learned during training.

The Growing Role of Inference

Although inference isn’t a new concept in AI, it’s becoming much more powerful and widely used. In the early days of AI, inference was limited and often inaccurate. Now, thanks to improvements in model architecture, training methods, and computing power, inference is far more reliable.

Modern AI can capture nuance in human language, generate realistic visuals, and even help complete tasks, all through inference. It’s not just about guessing what comes next; it’s about producing outputs grounded in patterns learned from vast amounts of data.

Improving Efficiency and Reducing Costs

As AI tools grow more advanced, another important focus is making inference more affordable and efficient. Running large AI models requires significant computing resources every time they produce an answer. To make these tools more accessible, engineers optimize the inference process so that smaller, cheaper models can still deliver high-quality results.

For example, lighter versions of powerful AI models like Google’s Gemini are being developed with efficiency in mind. This means more people can access advanced AI capabilities without the need for expensive hardware.
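Engineers use several techniques to make inference cheaper; one common example is quantization, which stores a model’s weights as 8-bit integers instead of 32-bit floats. The minimal Python sketch below assumes a handful of hypothetical weights and applies simple symmetric int8 quantization. It illustrates the general idea only and is not a description of how Gemini or any other specific model is optimized.

```python
from array import array

# Hypothetical example weights; real models have millions or billions of them.
weights = [0.82, -1.37, 0.05, 2.41, -0.66]

def quantize_int8(values):
    """Symmetric int8 quantization: map floats onto integers in [-127, 127]."""
    largest = max(abs(v) for v in values)
    scale = largest / 127 if largest > 0 else 1.0  # guard against all-zero input
    return [round(v / scale) for v in values], scale

def dequantize(quantized, scale):
    """Convert the stored integers back to approximate floats when the model runs."""
    return [q * scale for q in quantized]

q, scale = quantize_int8(weights)
approx = dequantize(q, scale)

fp32_bytes = array("f", weights).itemsize * len(weights)  # 4 bytes per weight
int8_bytes = array("b", q).itemsize * len(q)              # 1 byte per weight

print(q)
print(f"{fp32_bytes} bytes as float32 vs {int8_bytes} bytes as int8")
for original, recovered in zip(weights, approx):
    print(f"{original:+.4f} -> {recovered:+.4f}")
```

The trade-off is visible in the output: storage drops to a quarter, while each recovered weight is off by at most half of `scale`. Production systems use more refined schemes, but the size-versus-precision trade-off is the same.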

Inference in the Future

AI inference is also starting to do more than just answer questions or classify data. It’s enabling AI agents—systems that not only understand requests but can act on them. Whether it’s booking a reservation, organizing emails, or summarizing a meeting, AI systems are beginning to perform tasks on our behalf. This evolution expands the meaning of inference from simple prediction to complex decision-making and action.

Let’s Recap

Inference is what turns a trained AI model into something useful. It allows AI to recognize patterns, make predictions, understand language, generate images, and even take action. As AI continues to advance, inference will become even more efficient, accurate, and central to how these technologies support us in everyday life.

Whether you’re searching for something online, getting a recommendation, or interacting with a chatbot, you’re benefiting from inference—one of the most important and fascinating parts of how AI works.