A new study from Apple suggests that the long “thinking steps” we see from ChatGPT and Gemini might be an elaborate illusion hiding a deeper truth.

These days, almost everyone has heard about AI and its astonishing abilities. Models like ChatGPT, Claude, and Gemini are so good at writing text, generating code, and answering questions that it sometimes feels like we’re interacting with a truly intelligent being. Recently, a new generation of so-called “thinking” models has emerged, often referred to as “reasoning models.”

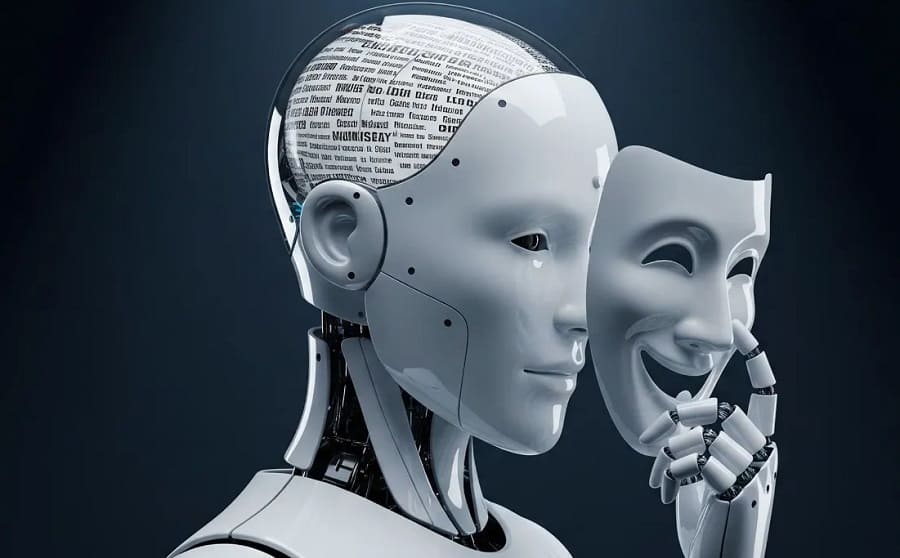

These new versions display long chains of “thought processes” before giving us a final answer, seemingly to prove that they’ve worked through the problem thoroughly. But is this really what’s happening? Are these models genuinely “reasoning,” or are they more like students who’ve memorized the answers and are just putting on a show?

That question lies at the heart of a fascinating new study by Apple researchers, titled “The Illusion of Thinking.” The findings are so surprising that they might permanently change how you view artificial intelligence.

The AI “Exam Scandal”: What if the Questions Were Leaked?

The first major issue the Apple researchers point out is strikingly similar to a real-world problem: leaked exam questions. Currently, we assess AI’s intelligence using math and programming tests. But there’s a serious flaw: data contamination.

AI models are trained on massive swathes of the internet. How can we be sure the exact same math or coding problem—along with its solution—wasn’t already published online and seen during training? If so, the AI isn’t solving the problem — it’s recalling a memorized answer. That’s like giving a perfect score to a student who memorized every past exam and calling them a genius!

And the researchers found evidence of this in practice. AI models performed worse on a newer math test (AIME25), whose questions were less likely to have appeared online, than on an older test (AIME24). It’s as if last year’s exam had been leaked while this year’s was fresh, and the models got exposed! The takeaway: to truly measure intelligence, we need a clean and fair testing ground.

A New Testing Ground: From Towers of Hanoi to Advanced Lego Games

To fix the data contamination issue, Apple’s researchers turned to classic puzzles and logic games. These games have simple rules but require real planning and reasoning to solve. Their big advantage? It’s easy to make them harder and ensure the AI has never seen the exact problem before.

The four games used were:

- Towers of Hanoi: A classic logic game where a stack of disks must be moved between three rods, one disk at a time, never placing a larger disk on a smaller one.

- River Crossing: You must ferry characters across a river in a boat of limited capacity, following rules about who may be left together.

- Blocks World: A kind of advanced Lego game, where stacks of blocks must be rearranged from an initial layout into a goal layout.

- Checker Jumping: A one-dimensional board game where red and blue checkers must swap sides by sliding into an empty space or jumping over a single neighboring checker.

These puzzles act like a precision laboratory: researchers can gradually increase the difficulty—by adding just one more block or disk—and observe how the AI responds.
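To make the “precision laboratory” idea concrete, here is a minimal sketch (not the researchers’ code) of a Tower of Hanoi simulator that replays a proposed list of moves and reports whether the puzzle was solved and how many moves were legal before the first rule violation. The function name `check_hanoi`, the peg numbering 0 to 2, and the (from, to) move format are assumptions made for illustration. Difficulty is a single parameter: raising `n` by one adds a disk.

```python
from typing import List, Tuple

# Hypothetical move format: (from_peg, to_peg), with pegs numbered 0, 1, 2.
Move = Tuple[int, int]


def check_hanoi(n: int, moves: List[Move]) -> Tuple[bool, int]:
    """Replay `moves` on an n-disk Tower of Hanoi starting on peg 0.

    Returns (solved, valid_count): whether all disks end up on peg 2,
    and how many moves were legal before the first illegal one.
    """
    # Disks are numbered 1 (smallest) to n (largest). Each peg is a list
    # used as a stack; its last element is the top disk.
    pegs: List[List[int]] = [list(range(n, 0, -1)), [], []]
    for i, (src, dst) in enumerate(moves):
        # Reject moves that name a nonexistent peg or go nowhere.
        if not (0 <= src <= 2 and 0 <= dst <= 2) or src == dst:
            return False, i
        # Reject moves from an empty peg.
        if not pegs[src]:
            return False, i
        disk = pegs[src][-1]
        # Reject placing a larger disk on top of a smaller one.
        if pegs[dst] and pegs[dst][-1] < disk:
            return False, i
        pegs[dst].append(pegs[src].pop())
    # Solved only if the full stack now sits on the target peg.
    solved = pegs[2] == list(range(n, 0, -1))
    return solved, len(moves)
```

For example, `check_hanoi(2, [(0, 1), (0, 2), (1, 2)])` returns `(True, 3)`, while `check_hanoi(2, [(0, 2), (0, 2)])` returns `(False, 1)` because the second move tries to put the larger disk on the smaller one. A checker of this kind makes it possible to grade every intermediate step, not just the final answer.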

The Results: From Triumph to Total Collapse

The experiments revealed three dramatically different stages of performance:

Act I: Simple Problems (When Thinking Hurts More Than It Helps)

In the simplest puzzles (like Towers of Hanoi with 3 disks), something strange happened. The “standard” models—those that didn’t simulate long reasoning—outperformed the “reasoning” ones. It’s as if the added reasoning only got in the way, introducing unnecessary complexity. Like trying to peel a fruit with a sword instead of a knife. This phenomenon, known as overthinking, shows that more reasoning isn’t always better.

Act II: Medium Problems (Where Thinking Finally Pays Off)

As the puzzles got slightly harder, the tables turned. The reasoning models began to shine. Their ability to simulate thought processes helped them solve moderately difficult problems more effectively than the basic models. Here, the long, winding “thinking steps” actually worked—and the computational investment paid off.

Act III: Hard Problems (Smashing Into a Brick Wall)

This is where things took a dramatic turn. When puzzles reached high complexity (like Towers of Hanoi with more than 8 disks), everything collapsed. Both standard and reasoning models failed completely, with accuracy dropping to zero.

In other words, reasoning isn’t some magical, unlimited power—it just pushes the failure point back a little. These models are like race cars that perform well on flat roads but stall completely on mountainous terrain.
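Part of why the wall arrives so abruptly is simple arithmetic. To move n disks, you must first move the top n − 1 disks out of the way, then move the largest disk, then move the n − 1 disks back on top of it. That gives the standard recurrence for the length of the shortest solution:

$$
T(1) = 1, \qquad T(n) = 2\,T(n-1) + 1 \quad\Longrightarrow\quad T(n) = 2^n - 1
$$

So 3 disks need 7 moves, 8 disks need 255, and 10 disks need 1,023. Each extra disk roughly doubles the number of moves a model must produce without a single mistake.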

The Tipping Point: The Harder the Problem, the Less Effort They Make

One of the most surprising findings was how the models behave when things get difficult. We humans tend to think harder when we hit a tough problem, but these models do the opposite. Charts in the study show that the models initially increase their “thinking effort” (the number of reasoning tokens they generate) as puzzles get harder, but only up to a point. Near the collapse, they suddenly give up and spend fewer tokens, even though they still have plenty of budget left.

It’s as if a student sees a really tough question in an exam, drops their pen, and says, “Forget it, this one’s impossible!” This odd behavior suggests a fundamental limit in how today’s reasoning models scale their thinking with a problem’s difficulty.

Bizarre Behaviors: Even Cheating Doesn’t Help

Digging deeper into the models’ thought traces revealed even stranger limitations:

- Even with the solution in hand, they still fail: In one striking test, researchers gave the AI the exact step-by-step algorithm for solving the Towers of Hanoi puzzle (basically, a cheat sheet). You’d expect the model to ace it. Shockingly, it didn’t help: the AI still failed at roughly the same point. This means the issue isn’t just in “planning” the steps, but also in executing them reliably.

- Good memory ≠ good reasoning: The models performed well on puzzles like Towers of Hanoi, which have many examples online, but they struggled with River Crossing problems, which are rarer on the internet, even when the River Crossing solution was far shorter than the Hanoi sequences they handled. This suggests their strength lies more in recalling memorized patterns than in logically solving fresh, unseen problems. Like someone who’s memorized a famous poem but can’t write a new verse.
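For readers curious what such a “cheat sheet” contains, the classic recursive procedure for Towers of Hanoi fits in a few lines. The sketch below is an illustrative Python version, not the exact text the researchers put in their prompts; the name `hanoi_moves` and the (from, to) move format are assumptions.

```python
from typing import List, Tuple

# Hypothetical move format: (from_peg, to_peg).
Move = Tuple[int, int]


def hanoi_moves(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> List[Move]:
    """Return the shortest move list that transfers n disks from src to dst."""
    if n == 0:
        return []
    # 1) Park the top n-1 disks on the spare peg,
    # 2) move the largest disk straight to the target,
    # 3) stack the n-1 parked disks back on top of it.
    return (
        hanoi_moves(n - 1, src, dst, aux)
        + [(src, dst)]
        + hanoi_moves(n - 1, aux, src, dst)
    )
```

For a computer, running this is trivial: `hanoi_moves(2)` returns `[(0, 1), (0, 2), (1, 2)]`, and `len(hanoi_moves(10))` is 1023. The experiment’s point is that the models struggled to carry out a procedure like this faithfully over hundreds of steps, even when they no longer had to discover it.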

What Does This Mean for Us?

The Illusion of Thinking study is a wake-up call for anyone relying on AI in daily life without understanding what’s really going on under the hood:

- Don’t be fooled by appearances: The next time an AI gives you a long, “thoughtful” answer, don’t be too impressed. Those reasoning steps may be just a convincing act.

- These models have strong memory, not deep minds: Their core strength is recalling and remixing what they’ve already seen—not inventing completely new solutions through logic.

- True intelligence is still far off: This study suggests that simply making models bigger and feeding them more data may not be enough to reach real, human-like general intelligence (AGI). We’ll likely need fundamental breakthroughs in how these systems are built and trained.

In the end, this research demystifies AI and shows it for what it really is: a powerful tool—not a magical mind. It helps us move past the hype and approach AI with clearer eyes, recognizing both its strengths and its very real limitations. Behind the dazzling performance of reasoning and thought, there’s still no true mind at work.