Artificial Intelligence (AI) is everywhere now—from chatbots to voice assistants to image generators. But how does AI actually become smart? Does it just look things up in a giant database? Not quite.

Let’s break it down in a way anyone can understand.

AI Is Not a Search Engine or a Database

Many people imagine AI like a supercharged Google, or a giant digital library that finds the right answer stored in its memory. But that’s not how it works.

AI models like ChatGPT or image generators don’t have a list of every possible question and answer. Instead, they learn from examples, much as humans do.

Think of AI Like a Student

Imagine teaching a child how to speak. You don’t hand them a list of sentences. Instead, you talk to them over and over. They begin to understand patterns in how language works: where to place words, what meanings they carry, how to respond to certain questions.

AI learns in a similar way—by being shown huge amounts of data.

For example, to train a language model like ChatGPT, developers give it a massive amount of text from books, websites, conversations, and articles. The AI reads through all this material and tries to figure out how language works. It learns to predict what word might come next in a sentence, based on everything it’s seen before.
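To make "predict the next word" concrete, here is a deliberately tiny sketch. It is not how ChatGPT works internally (real language models use neural networks with billions of settings), but it shows the same basic idea: count which word tends to follow which, then use those counts to guess. The training text and the function names are made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up training text (an assumption, for illustration only).
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigram_counts(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next(following, word):
    """Return the word seen most often after `word`, or None if never seen."""
    if word not in following:
        return None
    return following[word].most_common(1)[0][0]


counts = train_bigram_counts(corpus)
print(predict_next(counts, "sat"))  # on
print(predict_next(counts, "the"))  # cat
```

Notice that nothing here stores a question with its answer. The program only keeps statistics about which words appear together, and its guess comes from those statistics.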

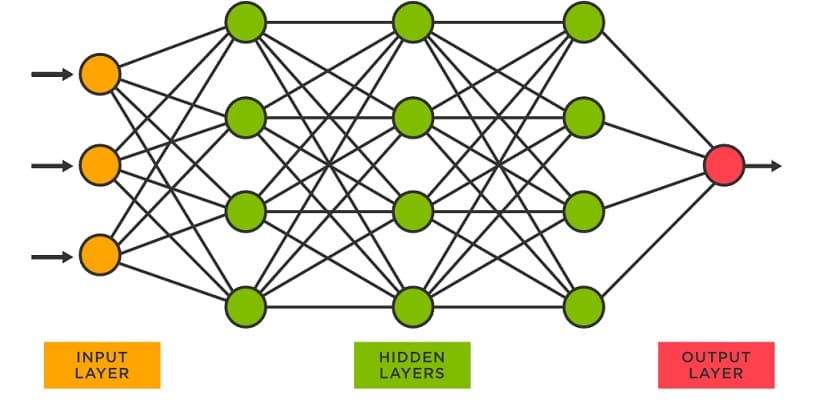

What Does “Training” Mean?

Training an AI model is a bit like teaching a brain. Here’s how it works, simplified:

- The AI starts with no knowledge, like a newborn. Its internal settings begin as random values, so its first guesses are mostly wrong.

- It looks at millions or billions of examples—like sentences, pictures, or sounds.

- It learns patterns—for example, that the word “cat” often appears with words like “meow” or “furry.”

- It makes guesses, and each guess is compared with the correct answer to measure how far off it was.

- It improves over time—just like you get better at a game the more you play.

This process is powered by something called machine learning, where the AI adjusts its internal settings (often called parameters or weights) a little after each guess, until it gets really good at predicting or generating correct responses.
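The guess, check, and adjust cycle can be sketched in a few lines. The example below is a minimal illustration with a single internal setting, assuming a made-up task where the hidden rule is "output = 3 × input". Real models adjust billions of settings at once, but the loop has the same shape. The data, the `train` function, and the learning rate are all assumptions chosen for this example.

```python
# Made-up examples that follow the hidden rule "output = 3 * input".
examples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]


def train(examples, learning_rate=0.01, epochs=200):
    """Learn one internal setting (a weight) by repeated guess-and-correct."""
    weight = 0.0  # the model starts out knowing nothing about the rule
    for _ in range(epochs):
        for x, target in examples:
            guess = weight * x        # make a guess
            error = guess - target    # check how far off the guess was
            # Nudge the setting in the direction that shrinks the error
            # (this is gradient descent on the squared error).
            weight -= learning_rate * error * x
    return weight


learned_weight = train(examples)
print(round(learned_weight, 3))  # very close to 3.0
```

The program is never told that the rule is "multiply by 3". It finds a setting close to 3 only because that setting makes its guesses match the examples.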

AI Doesn’t Know Things the Way Humans Do

Even though AI can produce intelligent-sounding answers, it doesn’t “understand” them the way you and I do. It doesn’t have feelings, beliefs, or awareness. It has simply learned how to mimic human language by studying a huge number of examples.

So when you ask it a question, it’s not pulling a fact out of storage—it’s generating a response based on patterns it learned during training.

Why So Much Data?

AI needs tons of examples to learn properly. In general, the more good-quality data it sees, the better it gets at capturing the different ways people write, speak, or behave.

Think of it like this: if you only read one book in your life, you wouldn’t be very good at writing essays. But if you read 1,000 books, you’d start to pick up on grammar, tone, vocabulary, and structure. AI learns the same way—by being exposed to a lot of information.
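Here is a rough way to see the "one book versus many books" effect in code. This sketch measures how many of the word pairs in a new sentence a simple pattern-counter has already seen, first after reading one sentence and then after reading four. The sentences and the `coverage` function are invented for this illustration and are not a standard measure.

```python
def known_pairs(sentences):
    """Collect every pair of neighbouring words seen in the sentences."""
    pairs = set()
    for sentence in sentences:
        words = sentence.split()
        pairs.update(zip(words, words[1:]))
    return pairs


def coverage(training_sentences, new_sentence):
    """Fraction of neighbouring word pairs in new_sentence seen in training."""
    seen = known_pairs(training_sentences)
    words = new_sentence.split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    return sum(pair in seen for pair in pairs) / len(pairs)


# A made-up "library" of four sentences.
library = [
    "the cat sat on the mat",
    "a dog slept on the sofa",
    "the bird sang in the tree",
    "a cat slept in the sun",
]
new_sentence = "a cat sat in the tree"

print(coverage(library[:1], new_sentence))  # 0.2 after reading one "book"
print(coverage(library, new_sentence))      # 0.8 after reading the whole "library"
```

The new sentence never appears in the library, yet most of its building blocks do once enough text has been read.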

What Happens After Training?

Once the AI is fully trained, it can respond to new questions it has never seen before. Rather than looking up a stored answer, it generates one on the spot, based on everything it learned during training.

You can think of it like how a chef can invent a new recipe using ingredients they know. They weren’t given this exact recipe before—but they know what works well together.
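The chef idea can be sketched with a toy text generator. Assuming three made-up training sentences, the program below learns which words can follow which, then builds a sentence one word at a time. The output may be a sentence that never appeared in the training text, even though every step in it was learned from that text. The names `learn_followers` and `generate` are hypothetical, and real models choose words in a far more sophisticated way.

```python
import random
from collections import defaultdict

# Made-up training sentences (an assumption, for illustration only).
training_sentences = [
    "the cat chased the mouse",
    "the dog chased the ball",
    "the mouse ate the cheese",
]


def learn_followers(sentences):
    """Record, for each word, every word that followed it in training."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = sentence.split() + ["<end>"]  # mark where sentences stop
        for current_word, next_word in zip(words, words[1:]):
            followers[current_word].append(next_word)
    return followers


def generate(followers, start_word, rng, max_words=12):
    """Build a sentence by repeatedly picking a word that can come next."""
    words = [start_word]
    while len(words) < max_words:
        options = followers.get(words[-1])
        if not options:  # unknown word: nothing learned about what follows
            break
        next_word = rng.choice(options)
        if next_word == "<end>":
            break
        words.append(next_word)
    return " ".join(words)


followers = learn_followers(training_sentences)
rng = random.Random(0)  # fixed seed so repeated runs give the same sentences
for _ in range(3):
    print(generate(followers, "the", rng))
```

A sentence such as "the dog chased the mouse" is possible here even though it is not in the training list, because each word pair in it was seen somewhere in training.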

So… Is AI Actually “Smart”?

It depends on how you define smart. AI can do amazing things—summarize articles, write poems, answer questions, translate languages—but it’s not conscious or self-aware.

It’s more like a really advanced tool that learned from examples. Its intelligence comes from the patterns it found in the data, not from thoughts or feelings.

Let’s Recap

AI isn’t just looking things up. It’s generating answers using patterns it learned from massive amounts of data. It’s not magic—but it is one of the most powerful tools humans have ever created.

By understanding how AI learns, we can better use it—and avoid common myths about what it can and can’t do.